“The danger isn’t that AI is too powerful; it’s that we stop questioning it.”

Artificial intelligence in medicine is often described as revolutionary, capable of diagnosing disease, predicting deterioration, and automating once-human decisions. But I want to suggest a different lens, one drawn not from technology, but from history.

Let’s go back to ancient Greece.

There, leaders turned to the Oracle of Delphi not for definitive answers, but for permission. They faced uncertainty and sought a higher authority to guide them. The Oracle gave them that. But her pronouncements were ambiguous, shaped by intermediaries, and prone to misinterpretation.

Sound familiar?

Medicine’s modern Oracle

Today, AI occupies a strikingly similar role in our clinical landscape. Whether it’s a predictive algorithm in the ED or a large language model summarizing charts, we increasingly consult machines when we want clarity in complexity.

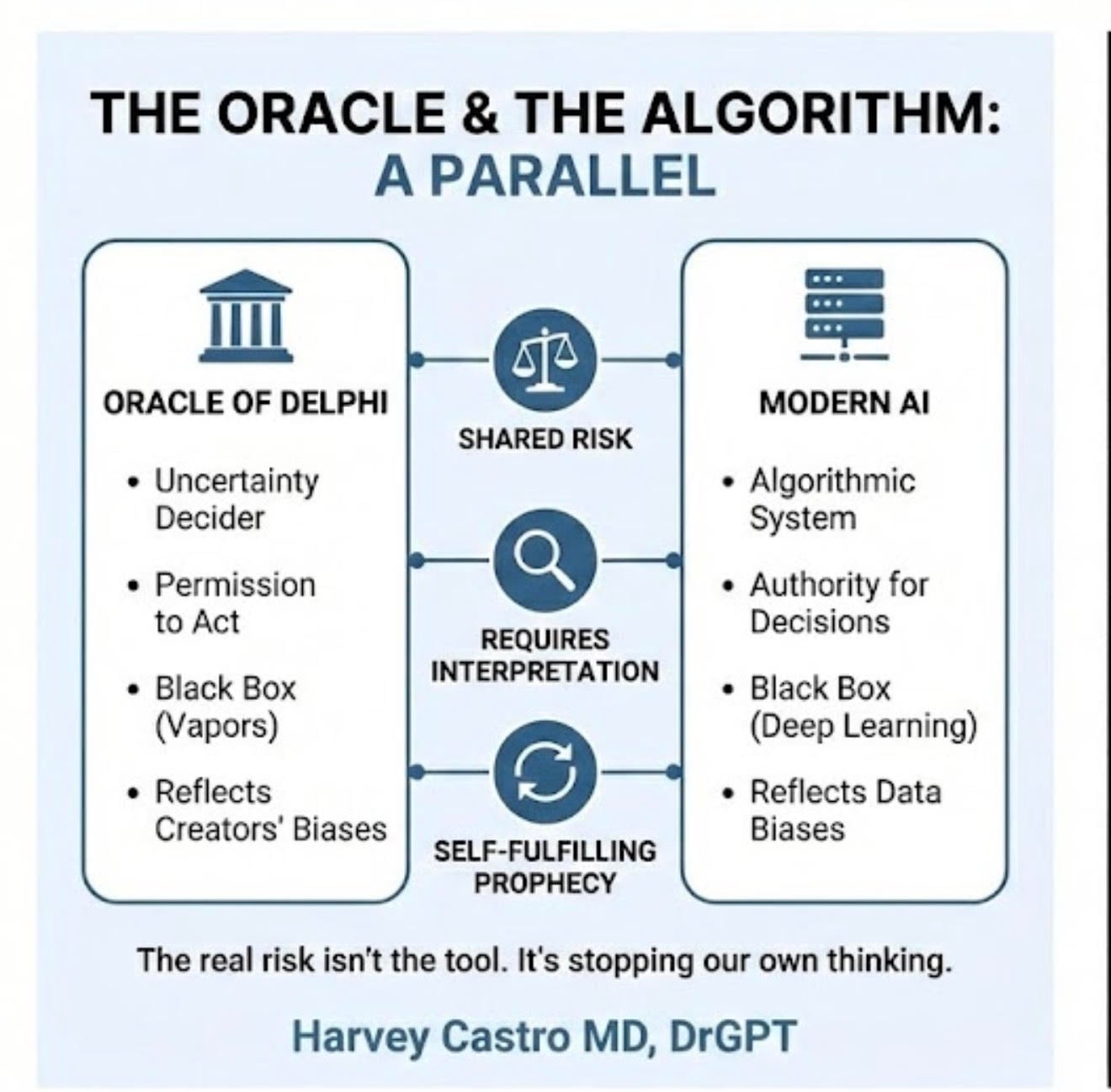

But here’s the catch. The Oracle and modern AI share five dangerous characteristics:

- Opacity: We often don’t fully understand how decisions are made.

- Bias: Both are shaped by human input, culture, and assumptions.

- Interpretation risk: Outputs are only as good as the human interpreting them.

- Self-fulfilling outcomes: Predictions influence behavior, which reinforces the original prediction.

- Authority creep: The more we trust the tool, the greater the risk of losing our own clinical judgment.

The black box in the exam room

AI’s appeal often lies in its mystique. It can process millions of data points and spot patterns we might miss. But as physicians, we must remember: Insight without explainability is not wisdom.

When a black-box algorithm recommends a treatment or, worse, denies a claim without transparency, we must ask: On what basis? Was the training data diverse enough? Does the model generalize to my patient population? Are the metrics clinically relevant?

If we can’t answer those questions, should we be basing decisions on the output?

Bias isn’t just a bug; it’s a mirror

Much like the Oracle, whose utterances were filtered through priests with their own agendas, AI reflects our own biases to us. We feed it historical data, and it faithfully reproduces historical inequities.

Algorithms may underdiagnose heart disease in women or stroke in Black patients. Risk prediction tools may overestimate readmission risk in safety-net hospitals, thereby influencing care delivery. And because these tools are branded as “objective,” we may be less likely to challenge them even when we should.
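One way to challenge an "objective" tool is to check whether it misses true cases more often in one group than in another. The sketch below is a minimal illustration, not a validated audit method. The function name `sensitivity_by_group`, the record layout, and the toy records are all assumptions made up for this example; none of it comes from a real tool or real patients.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """Return {group: sensitivity} from (group, has_disease, flagged) tuples.

    Sensitivity here means: of the patients in a group who truly had the
    disease, what fraction did the tool flag? Groups with no true cases
    are left out because the ratio is undefined for them.
    """
    true_cases = defaultdict(int)
    caught = defaultdict(int)
    for group, has_disease, flagged in records:
        if has_disease:
            true_cases[group] += 1
            if flagged:
                caught[group] += 1
    return {group: caught[group] / n for group, n in true_cases.items()}


# Made-up illustrative records (hypothetical groups "A" and "B").
toy_records = [
    ("A", True, True), ("A", True, True), ("A", True, False), ("A", False, False),
    ("B", True, True), ("B", True, False), ("B", True, False), ("B", False, True),
]

audit = sensitivity_by_group(toy_records)
for group, value in sorted(audit.items()):
    print(f"group {group}: sensitivity {value:.2f}")
```

In this toy data the tool catches two of three true cases in group A but only one of three in group B. A gap like that is the kind of signal that should prompt questions about the training data, not a verdict on its own.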

When prediction becomes prescription

AI doesn’t just reflect the future; it can shape it.

If a sepsis model flags a patient as high-risk, that patient may receive earlier antibiotics and closer monitoring. That’s good until we start treating the label, not the person. A poorly calibrated model might over-alert, leading to overtreatment. Or worse, under-alert, delaying care. Yet we still follow it because the machine said so.
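What "poorly calibrated" means can be made concrete by comparing the risk a model predicts with how often the event actually happens among patients given that risk. The following is a rough sketch under stated assumptions: `calibration_table` is a hypothetical helper, the equal-width binning is one simple choice among several, and the numbers are invented for illustration rather than taken from any sepsis model.

```python
def calibration_table(predicted_risks, outcomes, n_bins=5):
    """Compare mean predicted risk with the observed event rate per risk bin.

    predicted_risks: probabilities between 0 and 1.
    outcomes: 1 if the event occurred, 0 if it did not.
    Returns a list of (bin_low, bin_high, n, mean_predicted, observed_rate)
    tuples, one per non-empty bin.
    """
    if len(predicted_risks) != len(outcomes):
        raise ValueError("predicted_risks and outcomes must have equal length")
    bins = [[] for _ in range(n_bins)]
    for p, y in zip(predicted_risks, outcomes):
        if not 0.0 <= p <= 1.0:
            raise ValueError("predicted risks must lie between 0 and 1")
        idx = min(int(p * n_bins), n_bins - 1)  # a risk of exactly 1.0 goes in the top bin
        bins[idx].append((p, y))
    rows = []
    for i, members in enumerate(bins):
        if not members:
            continue
        n = len(members)
        mean_predicted = sum(p for p, _ in members) / n
        observed_rate = sum(y for _, y in members) / n
        rows.append((i / n_bins, (i + 1) / n_bins, n, mean_predicted, observed_rate))
    return rows


# Made-up illustrative data, not output from a real model.
toy_risks = [0.1, 0.1, 0.1, 0.1, 0.9, 0.9, 0.9, 0.9]
toy_outcomes = [0, 0, 0, 1, 1, 0, 0, 1]

table = calibration_table(toy_risks, toy_outcomes, n_bins=2)
for low, high, n, predicted, observed in table:
    print(f"risk {low:.1f}-{high:.1f}: n={n}, predicted {predicted:.2f}, observed {observed:.2f}")
```

In this toy example the high-risk bin predicts about 0.90 while only half of those patients had the event, which is the over-alerting pattern described above. A low-risk bin whose observed rate sits well above its predicted risk would point the other way, toward under-alerting.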

This is how AI becomes a self-fulfilling prophecy.

The cognitive cost of outsourcing judgment

There’s emerging evidence that overreliance on AI correlates with declines in critical thinking, especially among younger clinicians. The term is cognitive offloading: We begin to let the machine think for us.

That’s convenient until it’s not.

When AI gets it wrong (and it will), will we be ready to challenge it? Or will we defer to the algorithm, even when our gut says otherwise? This is not just a technical issue. It’s a training issue. An ethical issue. A human issue.

Guidelines for responsible use

As someone who lives at the intersection of medicine and AI, I believe we can use these tools wisely if we remain the final decision-makers. Here’s how:

- Demand transparency: Know what data trained the model. Ask who validated it and on whom. Require explainability for clinical outputs.

- Challenge bias: Consider how historical inequities may influence predictions. Involve diverse stakeholders in model development and deployment.

- Preserve human oversight: Use AI as a second opinion, not a final word. Trust your clinical instincts and teach trainees to do the same.

- Track outcomes: Monitor the impact of AI-assisted decisions. Adjust workflows based on real-world performance, not just vendor promises.
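Tracking outcomes does not have to start with anything elaborate. As a hedged sketch, a team could log each alert along with whether a clinician later confirmed it, then watch how that fraction moves month to month. The names `monthly_alert_precision` and `months_below`, the log format, the 0.5 floor, and the sample entries are all hypothetical choices for this illustration.

```python
from collections import defaultdict


def monthly_alert_precision(alert_log):
    """Return {month: fraction of fired alerts later confirmed}.

    alert_log: iterable of (month, was_confirmed) pairs, one per fired alert.
    """
    fired = defaultdict(int)
    confirmed = defaultdict(int)
    for month, was_confirmed in alert_log:
        fired[month] += 1
        if was_confirmed:
            confirmed[month] += 1
    return {month: confirmed[month] / fired[month] for month in sorted(fired)}


def months_below(precision_by_month, floor):
    """List the months whose confirmed fraction fell below the chosen floor."""
    return [month for month, value in precision_by_month.items() if value < floor]


# Made-up illustrative log: (month label, whether the alert was confirmed).
toy_log = [
    ("month-1", True), ("month-1", True), ("month-1", True), ("month-1", False),
    ("month-2", True), ("month-2", False), ("month-2", False), ("month-2", False),
]

precision = monthly_alert_precision(toy_log)
needs_review = months_below(precision, floor=0.5)  # 0.5 is an arbitrary example threshold
print(precision)
print("review:", needs_review)
```

A falling confirmed fraction does not say why the tool is drifting. It only tells you when to go back and ask the transparency and bias questions listed above.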

We are the Oracle now

The inscription at Delphi read: “Know thyself.”

That advice is as relevant to physicians in the AI era as it was to ancient consultants of the divine. Knowing the limits of the machine and of ourselves is what keeps patients safe.

AI can and should support our decisions. But the moment we stop questioning, we stop practicing medicine.

So, no, AI is not the new Oracle. We are.

We carry the judgment, the responsibility, and the humanity that machines cannot replicate.

Harvey Castro is a physician, health care consultant, and serial entrepreneur with extensive experience in the health care industry. He can be reached on his websites, www.harveycastromd.com and ChatGPT Health, X @HarveycastroMD, Facebook, Instagram, and YouTube.

He is the author of Bing Copilot and Other LLM: Revolutionizing Healthcare With AI, Solving Infamous Cases with Artificial Intelligence, The AI-Driven Entrepreneur: Unlocking Entrepreneurial Success with Artificial Intelligence Strategies and Insights, ChatGPT and Healthcare: The Key To The New Future of Medicine, ChatGPT and Healthcare: Unlocking The Potential Of Patient Empowerment, Revolutionize Your Health and Fitness with ChatGPT’s Modern Weight Loss Hacks, Success Reinvention, and Apple Vision Healthcare Pioneers: A Community for Professionals & Patients.
